Ever since people realized that there was valuable traffic to be sourced from search engines, a perpetual game of cat and mouse has ensued between webmasters/SEOs and search engines (chiefly Google). The Big G makes tweaks to clean up its results pages and establish better quality metrics, and SEOs then find ways to game those metrics and tap into the sweet traffic nectar that Google provides.

For a long while this relationship was very much tit for tat. Google would spot a problem (or loophole) in its SERPs and make a change to rectify it, and then SEOs would spend some time figuring out the change and developing a workaround. Here are a couple of examples:

Vince update...

In a nutshell, the Google Vince update was aimed at giving greater value to big “brands” that Google felt it could trust not to spam users and to offer high-quality content. As a result, a large number of sites in competitive verticals lost out: they didn’t seem to attract “brand” signals and so were overtaken by bigger names. It took a little while for SEOs to figure out what Google’s brand signals were, but it eventually transpired that brand citations, brand anchor text links and brand searches all played their part in the new algo tweak. The best SEOs were able to engender these signals and began improving their rankings.

Mayday update...

Google’s Mayday update aimed to improve the quality of long-tail search results and make them harder for website owners and marketers to game. Many websites lost large volumes of traffic as their extensive content failed to bring in the long-tail rankings it once did. However, after a bit of trial and error, SEOs realized that if they enhanced their internal linking structure, updated their low-level pages with fresh content and diverted more of their efforts to building external deep links to those pages, much of their lost traffic could return.

There are, of course, hundreds of changes to Google’s algorithm every year, but most of them go unnoticed. The big changes that did have a more overt impact happened one at a time, which crucially limited the variables and gave SEOs plenty of time to react. That was until...

Google Panda update...

Fed up with releasing large updates only for them to be gamed a few months later, Google decided to change tack. The Panda update was like nothing it had done before, and it had two big differences that were crucial to its success.

1.) It was a multi-faceted, multi-variable update.

This update did not just look at one element of SEO; it looked at many. It addressed linking, onsite optimization, user metrics, search data, content, social signals, and probably a few more. Additionally, it did not just look at one factor of each element, but instead analyzed each part individually and then as a whole to determine whether a site should be “trusted” and whether it added “worth” for users. Here are some of the factors that could influence whether or not you got a Panda slap, which I’ve gathered from many blogs and forums over the past few months:

- Having a large amount of duplicate content, either internally or cross-site

- Having thin content on multiple pages

- Having little or no unique content in terms of topics covered

- Having duplicate content under multiple "tags" within a blog

- Excessive AdSense or banner advertising on multiple pages

- Excessive on-page keyword targeting

- Overly aggressive link building

- Over-targeting of keyword anchor text

- Large percentage of backlink profile coming from low-quality sites

- High bounce rates across the site

- Low average time on page/site

- Low SERP click-through rates

- Low level of brand/domain name searches taking place

- Low activity in social sharing networks (Facebook ‘likes’, Twitter ‘tweets’)

Some of these may be more accurate than others, but what is clear is just how many issues people believe influenced the Panda update. By vastly increasing the number of variables that went into the algo change, Google made it far more difficult to identify which of them caused your site to drop, and trial-and-error testing now takes a great deal more time and effort. But Google didn’t stop there, oh no: it decided that, even if you could work through the list changing one thing at a time, it would throw another spanner in the works with the update's second key attribute:

2.) The algorithms need to be re-run before any changes you make take effect.

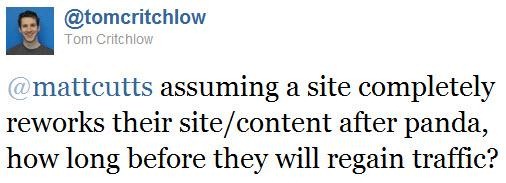

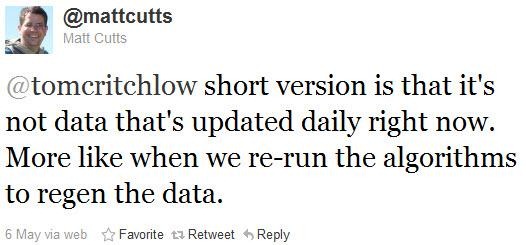

A recent conversation between Tom Critchlow and Matt Cutts on Twitter went like this:

Now, like most SEOs, I don’t believe everything Mr Cutts says, but this would certainly account for why we haven’t seen many sites recover from the Panda update after making extensive onsite changes, and, you have to admit, it would be a masterstroke from Google.

Let's say, for example, that you suspect you got a Panda slap for having too much advertising above the fold compared to the amount of unique content. You decide to cut your banner slots right down or remove them altogether, and then you wait. With previous updates you probably only had to wait for your pages to be re-crawled, and you’d find out whether your change had worked soon after. If it hadn’t, you could move on and try something else. With Panda, however, you could make a change and then have to wait six months (or more) to find out what impact it had, because the algorithm doesn’t run in real time. Imagine you had 10 variables to test one at a time: that could take you 5+ years!
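To put rough numbers on that last point, here is a minimal Python sketch. It assumes, purely for illustration, that each suspected factor is changed in isolation and that its effect only becomes visible after the next Panda re-run, taken here as a fixed six months; the function name is hypothetical and not anything Google has published.

```python
def months_to_test_sequentially(num_variables: int, months_per_rerun: float) -> float:
    """Hypothetical helper: total wait if each change is tested on its own
    and its effect only shows up after the next algorithm re-run."""
    # One variable per re-run, so the waiting periods simply add up.
    return num_variables * months_per_rerun


if __name__ == "__main__":
    # Assumed figures from the example above: 10 suspects, 6 months per re-run.
    months = months_to_test_sequentially(10, 6)
    print(f"{months:.0f} months, or about {months / 12:.0f} years")
```

Since six months is a floor ("or more"), any longer gap between re-runs only pushes the total further past five years.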

When pushed for advice on how to overcome the update, Google has basically just pointed back to its long-standing guidelines on quality content. Many SEOs and webmasters have therefore had no choice but to make all the changes Google has long been asking for, in the hope that once the algorithms are re-run the Panda will have mercy on them.