In my experience, it is essential to have a 'campaign' tool that keeps track of the websites you manage, along with ranking reports, crawl diagnostics, traffic, link analysis, social media, etc. Since my campaign tool of choice is SEOmoz PRO, I wanted to know whether I could also use it for link prospecting without having to shell out for a more specialized tool.

Whatever link building tactic you prefer (and whichever color hat you wear), link prospecting is unavoidable. Although the first port of call for many SEOs is to examine a competitor's backlink profile, at some stage you will need to find link partners of your own.

General Workflow

Most link building methods require link prospecting and outreach, and generally follow a similar pattern:

1. Create a long list of link targets

2. Reduce this to a short list based on defined criteria (e.g. Domain Authority)

3. Qualify the websites for suitability/relevancy

4. Develop pitch(es) based on target persona

5. Pitch and follow up

Various stages of this workflow can be automated. However, it is very easy to fall into the trap of saving time by automating one stage, only to find that the next stage has been lengthened as a result (e.g. poor targeting in stage 1 that turns stages 2 and 3 into a mammoth job).

- See also Debra Mastaler's link building workflow

In my opinion, for the most effective outreach you should not try to automate the pitching process. Even if you use a templated e-mail solution (such as ToutApp), there should still be a level of manual customization. Similarly, to accurately judge a website's suitability for your proposal, it is almost always necessary to check it manually yourself. As such, it is stages 1 and 2 that I normally look to automate, and to automate them in a way that does not make stage 3 a complete nightmare!

There is a range of paid tools that will help with the link prospecting phase. Ontolo is useful if you are running several campaigns, as you can set it to find prospects based on your criteria and it will run in the background or overnight, freeing up your time. On the downside, since you do not see your results in real time, the lag can mean that you don't realise your query was too broad (or too narrow), which can actually waste valuable time. Citation Labs offers a range of tools that are good at gathering specific pieces of information; they are very reasonably priced, but you normally have to pull the data together yourself afterwards. Paying for all these tools puts a lot of pressure on your budget, so let's see if we can get by with only the SEOmoz tools at hand...

Example: Link Building Using Food Bloggers

In this example, we will look for food bloggers to whom we could send a sample to review on their site (the sample could be a tasting pack, a food-related product, etc.). When reviewing a product, bloggers naturally tend to link back to the originating site, so this can be a good way to earn deep links to your product pages.

Step 1: Finding Prospects On Google

To pull decent data from Google, you will need to have some experience with Google Search Operators. There are plenty of fantastic resources online that go into great detail about all the various options, but this one is probably the most useful. A lot of people will claim you need to be vastly experienced with search operators in order to prospect through Google, but that is not really true - you just need a bit of creativity and a willingness to experiment.

Whenever I begin this process, it always helps to start with the broadest terms, see what patterns arise and narrow it down from there. So, to begin with, we will simply try:

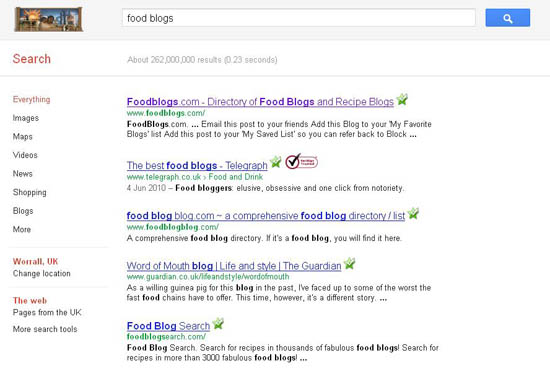

Search 1: Food Blogs

The first few results show us some curated lists where other people have done the hard work for us. Excellent - grab as many relevant URLs as you can from these curated lists, and even consider some related searches (e.g. 'top 10 food blogs').

In particular, however, we are looking for food bloggers who might be interested in doing a product review, and a good indicator of this is that they have done a review before. We also want to keep shipping costs down, so we will target only UK sites by adding the site: operator.

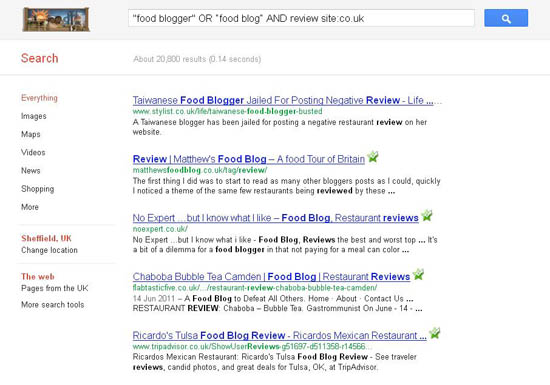

Search 2: "food blogger" OR "food blog" AND review site:co.uk

Hmmm, not bad, but we seem to have quite a few restaurant reviews. We can fix that by adding some negative matches. Scrolling through the results, I also notice some 'big' websites, along with a few other sites that crop up regularly, so these can come out as well:

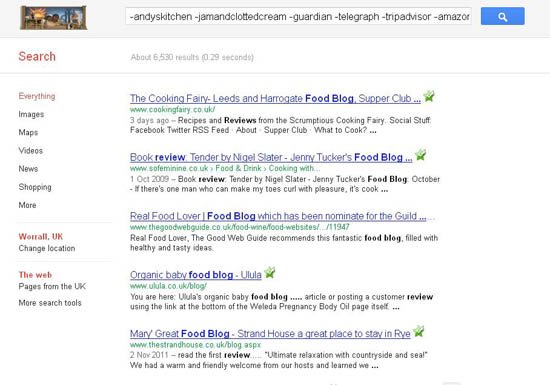

Search 3: "food blogger" OR "food blog" AND review site:co.uk -restaurant -andyskitchen -jamandclottedcream -guardian -telegraph -tripadvisor -amazon

Ok, our results now look fairly relevant, so we'll keep these.
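If you find yourself rebuilding long queries like this one for several campaigns, a small helper can assemble them from their parts. The Python sketch below uses a hypothetical helper, `build_query` (my own name, not part of any tool), that quotes each alternative phrase, joins them with OR, and then appends the required terms, the site: restriction and the minus-prefixed exclusions in the same order as Search 3. You would still paste the result into Google by hand.

```python
def build_query(alternatives, required=(), site=None, exclude=()):
    """Assemble a Google query string from search-operator pieces (hypothetical helper)."""
    # Quote each phrase and join the alternatives with OR,
    # e.g. "food blogger" OR "food blog"
    parts = [" OR ".join(f'"{phrase}"' for phrase in alternatives)]
    # Required terms, written with an explicit AND as in the queries above
    parts.extend(f"AND {term}" for term in required)
    # Restrict results to one domain or TLD, e.g. site:co.uk
    if site:
        parts.append(f"site:{site}")
    # A leading minus asks Google to leave out results containing the term
    parts.extend(f"-{term}" for term in exclude)
    return " ".join(parts)


if __name__ == "__main__":
    print(build_query(
        ["food blogger", "food blog"],
        required=["review"],
        site="co.uk",
        exclude=["restaurant", "andyskitchen", "jamandclottedcream",
                 "guardian", "telegraph", "tripadvisor", "amazon"],
    ))
```

Keeping the exclusions as a list makes it easy to add another negative match each time a new irrelevant site keeps cropping up.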

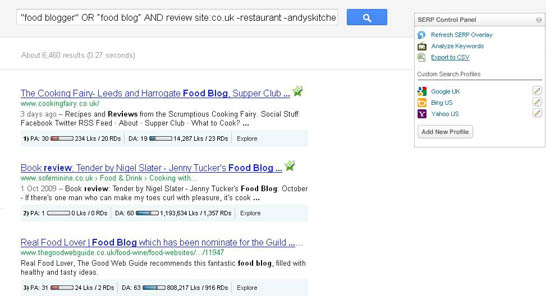

Step 2: Export To CSV Using The MozBar

When I am working with any kind of large dataset, I always look to get the data into Excel and manipulate it from there. Fortunately, the Firefox MozBar extension has a handy feature for exporting your results to CSV (with the SERP overlay switched on). This exports each URL along with its Page Authority, Domain Authority and linking data. A normal search only gives you 10 results per page, so go to Google Search Preferences, switch Instant off and change your results per page to 100. Now you can run 10 exports (pages 1-10) to get 1,000 results to analyze. Make sure you wait for the SERP overlay to finish updating each record before you export, or you will get incomplete results.
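Because a half-loaded overlay can leave blank cells in the export, it may be worth checking each file before merging. This is a minimal Python sketch, assuming the export is a CSV with a header row. The column names `Page Authority` and `Domain Authority` are assumptions, so check them against the header row of your own MozBar export and adjust `METRIC_COLUMNS`. `incomplete_rows` is a hypothetical helper that lists the data rows with an empty metric cell, so you know which page to re-export.

```python
import csv
import io

# Assumed header names; check the first row of your own MozBar export.
METRIC_COLUMNS = ("Page Authority", "Domain Authority")


def incomplete_rows(csv_text, metric_columns=METRIC_COLUMNS):
    """Return 1-based data-row numbers (header excluded) with a blank metric cell."""
    reader = csv.DictReader(io.StringIO(csv_text))
    missing = []
    for row_number, row in enumerate(reader, start=1):
        # An empty or absent cell suggests the overlay had not finished loading
        if any(not (row.get(column) or "").strip() for column in metric_columns):
            missing.append(row_number)
    return missing
```

For example, `incomplete_rows(open("export-page-1.csv", encoding="utf-8").read())` (the file name is hypothetical) returns an empty list when every row has its metrics filled in.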

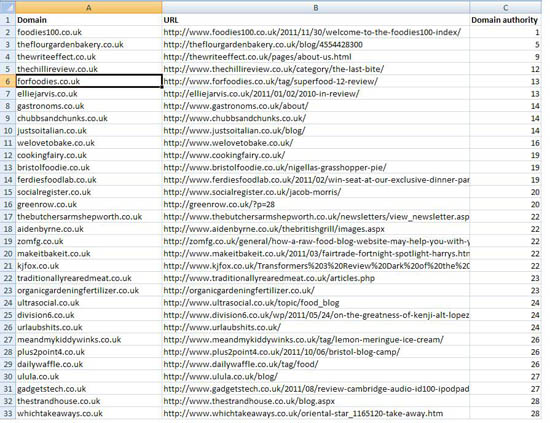

Step 3: Use Excel To Create A Shortlist

Once you have pulled your 10 exports into one master spreadsheet, you will need to remove the duplicates (remember to add back in your 'removed' results and the URLs from the curated lists). To do this, extract the domain/subdomain using Find/Replace along with Text to Columns, then remove duplicates based on that field. Next, decide which metric is most important to you and remove results based on it.
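If you prefer scripting to Find/Replace and Text to Columns, the merge-and-dedupe step can be sketched in Python. This assumes each export is a CSV with a `URL` column (an assumed header name; adjust `url_column` to match your file). The hypothetical `merge_exports` function keeps the first row it sees for each host. Stripping a leading `www.` is my own choice, on the assumption that `www.example.co.uk` and `example.co.uk` are the same blog.

```python
import csv
import io
from urllib.parse import urlsplit


def host_of(url):
    """Return the lower-cased host of a URL with any leading 'www.' removed."""
    # urlsplit only recognises the host when a scheme is present
    if "://" not in url:
        url = "http://" + url
    host = urlsplit(url).hostname or ""
    return host[4:] if host.startswith("www.") else host


def merge_exports(csv_texts, url_column="URL"):
    """Combine several CSV exports, keeping only the first row seen per host."""
    seen = set()
    merged = []
    for text in csv_texts:
        for row in csv.DictReader(io.StringIO(text)):
            host = host_of((row.get(url_column) or "").strip())
            if host and host not in seen:
                seen.add(host)
                merged.append(row)
    return merged
```

To add back your 'removed' results and curated-list URLs, put them in one more CSV with the same `URL` header and pass its text in the list alongside the ten exports.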

In our example we are looking for blogs, and any newly written blog post will have a Page Authority of 1, so PA is not very useful to us. Instead, we can sort by DA and examine the extremes. The very high DA sites are often unachievable and not worth pitching to; in this example we have pages from the BBC, Google and The Independent. I can't see them accepting a product sample, so we scrub them from the list. Similarly, you will need to decide on a threshold for the low DA sites: are they worth your while? If not, remove them from your list. In this case we'll remove anything with a DA below 30, leaving a set of target sites with Domain Authority between 30 and the mid-80s.
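The DA cut-off can be scripted too. The sketch below is a hypothetical `shortlist` helper that skips rows with a missing or non-numeric DA, removes any domains you have ruled out by hand (plus their subdomains), keeps rows with a DA of at least 30 and sorts the rest from highest to lowest DA so the extremes are easy to review. Here `rows` is assumed to be a list of dicts, one per CSV row (for example as produced by `csv.DictReader`), and the column names `URL` and `Domain Authority` are assumptions about the export's headers.

```python
from urllib.parse import urlsplit


def shortlist(rows, min_da=30.0, exclude_domains=(),
              url_column="URL", da_column="Domain Authority"):
    """Filter rows by a minimum Domain Authority and sort them by DA, highest first."""
    kept = []
    for row in rows:
        try:
            da = float(row[da_column])
        except (KeyError, TypeError, ValueError):
            continue  # no usable DA value, so skip the row
        host = urlsplit(row.get(url_column) or "").hostname or ""
        # Drop domains ruled out by hand, including their subdomains
        if any(host == d or host.endswith("." + d) for d in exclude_domains):
            continue
        if da >= min_da:
            kept.append((da, row))
    # Highest DA first, so both extremes are easy to eyeball
    kept.sort(key=lambda pair: pair[0], reverse=True)
    return [row for _, row in kept]
```

For example, `shortlist(rows, exclude_domains=["bbc.co.uk", "independent.co.uk"])` would mirror the manual clean-up described above; the domain list is only illustrative, so substitute whichever sites you decide to scrub.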

Depending on your search query, this should leave you with around 100-200 targets to qualify. You will still need to go through and make manual decisions about the suitability/relevancy of each site to your campaign, but hopefully you are now qualifying a couple of hundred websites rather than a couple of thousand.

Note: If you want to examine other metrics that the MozBar does not pull through, experiment with the SEOmoz API. The free version gives you plenty of data, and by following this great post on SEO Gadget you can quite easily build an Excel spreadsheet that makes API calls based on a list of domains.

Conclusion

And that's that! Using only the SEOmoz toolset, we have created a targeted list of link prospects to qualify and pitch to. I would also recommend running your finished list through the Contact Finder tool from Citation Labs (you can try it for the cost of a tweet!), which will find contact details for you and save further time. You will still have the laborious task of qualifying and pitching to all your contacts, which will normally take several days, but hopefully the links will be worth it.

If anyone has any other inventive ways of link prospecting, please feel free to share them in the comments below.

Follow-up reading: