Managing large sites is challenging. There is a lot to deal with, from the technical quirks of the content management system to managing crawl paths to the content you want to promote without overly enthusiastic use of nofollow/noindex. There is legacy content to consider and, in the case of some sites, duplicate content and pagination problems. In all this, it is very easy to focus only on what already works. Most of the reporting tools SEOs deal with daily report on what already has traffic, and in most cases rightly so.

Does it Even Matter?

However, what about the content that does not get traffic? Does it even matter if these pages disappear into the back end of the sitemap, never to be seen in Google Analytics at all? Here is a hint: it can matter. There are a number of reasons why a page that once got traffic no longer does, and most of these are fixable. You also need to be aware of pages that have yet to perform. Every page is a potential landing page and, assuming it contains content, it can rank for something.

Unfortunately, a lot of the tools online marketing professionals rely on are not up to this task. Their limitations include:

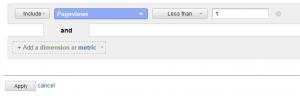

- Google Analytics does not report on 0 pageview pages.

- Webalizer does not report on 0 hit pages.

- Sitemaps may not list the entire site.

- The site: operator can be inaccurate and return pages that are no longer there.

- Google Webmaster Tools only reports on a limited collection of pages.

- Bing Webmaster Tools has the same issue as the Google version, only more so.

Enter the Spider

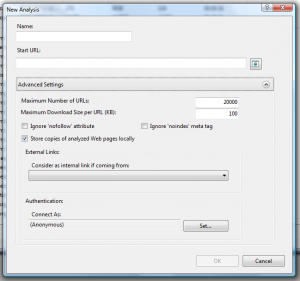

Unfortunately, since some tools only report on what gets traffic and others return only limited and sometimes inaccurate information, you will just have to crawl the website yourself. Personally, I use the SEO Tool Kit available on IIS 7, but there are other tools available, such as Screaming Frog. This method requires a little more effort than just checking Google Analytics, but it is easy to do and the process is simple to follow.

Crawl, Query and Export

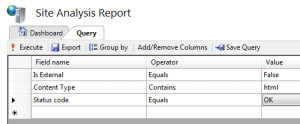

First you will need to crawl the website, ignoring nofollow and noindex as appropriate. Once the crawl is complete, you can use the SEO Tool Kit's query tool. This tool is very flexible and can be very powerful for assessing onsite technical and structural issues. In this example, though, all that is required is a list of pages, excluding those that are external to the site and those that return any status code other than OK.

The list can be exported as a CSV from here, ready for the next step.
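If your crawler only gives you a raw export, the same filter can be applied afterwards. The following is a minimal sketch, not the SEO Tool Kit's own query: the column names `URL`, `StatusCode` and `IsExternal` are assumptions for illustration, so rename them to match whatever your crawl export actually contains.

```python
import csv
import io


def crawlable_pages(rows, url_col="URL", status_col="StatusCode",
                    external_col="IsExternal"):
    """Return internal URLs that responded with status 200 (OK).

    The column names are hypothetical defaults; pass your own if the
    export uses different headers.
    """
    pages = []
    for row in rows:
        is_external = row[external_col].strip().lower() == "true"
        is_ok = row[status_col].strip() == "200"
        if not is_external and is_ok:
            pages.append(row[url_col].strip())
    return pages


# A small inline sample standing in for the crawl export file.
sample = io.StringIO(
    "URL,StatusCode,IsExternal\n"
    "http://www.example.com/,200,False\n"
    "http://www.example.com/about/,200,False\n"
    "http://www.example.com/missing/,404,False\n"
    "http://partner.example.org/,200,True\n"
)

pages = crawlable_pages(csv.DictReader(sample))
print(pages)
```

With a real export, replace the `io.StringIO` sample with `open("crawl.csv", newline="")`, where `crawl.csv` is whatever name you gave the exported file.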

Comparing Data

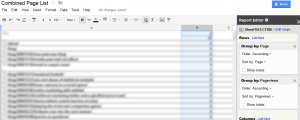

With a list of all pages receiving traffic for the time period of interest from Google Analytics and the full list of crawlable URLs from the SEO Tool Kit, it is now a simple matter to combine the two lists. In this case, take the CSV export of the page report from Google Analytics and append the URL list from the Tool Kit to the bottom. Ensure that the format of the pages from both tools matches, cleaning out text such as the domain name as needed.
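The clean-up and append step can also be scripted. This is a rough sketch under a few assumptions: the Google Analytics rows are already loaded as (page, pageviews) pairs, the crawl list holds full URLs, and the helper names `to_page_path` and `combine_lists` are made up for this example. Crawled URLs are given a pageview count of 0 so they contribute nothing when the list is later totalled.

```python
from urllib.parse import urlsplit


def to_page_path(url):
    """Strip the scheme and domain so crawl URLs and Analytics pages match."""
    parts = urlsplit(url.strip())
    path = parts.path or "/"
    return f"{path}?{parts.query}" if parts.query else path


def combine_lists(analytics_rows, crawl_urls):
    """Append the crawl list to the bottom of the Analytics list."""
    combined = [(to_page_path(page), views) for page, views in analytics_rows]
    combined += [(to_page_path(url), 0) for url in crawl_urls]
    return combined


# Illustrative data only.
analytics_rows = [("/", 120), ("/about/", 15)]
crawl_urls = [
    "http://www.example.com",
    "http://www.example.com/about/",
    "http://www.example.com/old-offer/",
    "http://www.example.com/blog/?page=2",
]

combined = combine_lists(analytics_rows, crawl_urls)
for page, views in combined:
    print(page, views)
```

Whether to keep the query string is a judgment call. It is kept here because paginated URLs are often exactly the pages worth checking, but it should match how your Analytics report records pages.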

Of Spreadsheets and Pivot Tables

Once this list is complete, create a pivot table with the pages as the rows and pageviews, or the metric of your choice, in the data area. Excel will collapse duplicate pages into a single row and return a list of all the site's content, with and without activity data. Pages that appear only in the crawl list show no pageviews, and these are the pages receiving no traffic.

While pivot tables are available in the Google Docs Spreadsheet, the output is messier than it is from Excel. Unlike Excel, the duplicate pages are not removed, only grouped. The information is still there and it can be exported as a CSV.
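If you would rather skip the spreadsheet, the same grouping can be approximated in a few lines of code. This is only a sketch of the idea, assuming the combined list is a sequence of (page, pageviews) pairs in which crawled pages were appended with 0 pageviews; `pivot_pageviews` is a hypothetical helper name.

```python
from collections import defaultdict


def pivot_pageviews(combined):
    """Group duplicate pages into one entry and sum their pageviews."""
    totals = defaultdict(int)
    for page, views in combined:
        totals[page] += views
    return dict(totals)


# Illustrative combined list: Analytics rows first, crawl rows appended.
combined = [
    ("/", 120),
    ("/about/", 15),
    ("/", 0),
    ("/about/", 0),
    ("/old-offer/", 0),
]

totals = pivot_pageviews(combined)
no_traffic = sorted(page for page, views in totals.items() if views == 0)
print(no_traffic)  # ['/old-offer/']
```

Any page left with a total of 0 was found by the crawl but never appeared in the traffic report for the chosen period.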

Finding pages with no traffic is not hard. There is a little work involved and it does require a few tools, but turning the data into the information you need is not all that difficult. Like so many other things in this industry, you just need to do the work.
