Last time I showed you how to use Excel to reveal a site’s directory structure. Today Excel is going to show you how many levels deep your URLs go, even if those levels are URL parameters.

Why?

Oversimplified: the shallower, simpler and cleaner a URL is, the better.

Every extra /directory/ and ?url_parameter adds another level of depth to a site, which can waste a search engine spider’s time on your site.

Finding out how deep URLs go is as easy as counting directories and parameters by counting /s and =s (every URL parameter carries one =).

Here’s how you do that automatically with Excel.

Get A List Of URLs

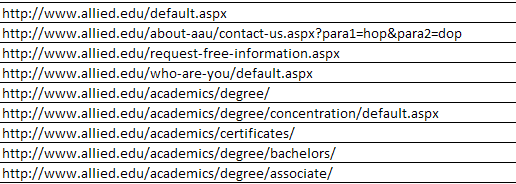

Get your list of URLs by crawling the site, scraping Google, getting a sitemap: anything.

Most of the time Excel can import just about anything and show it in a pretty structured manner.

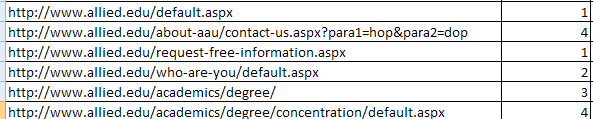

Like last time, I’m using the sitemap of Allied American University, only I’ve added a few fake URL query parameters.

Insert Directory Depth Formula

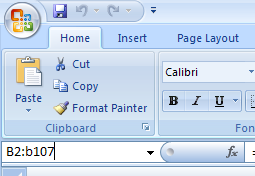

With your URLs in column A, here’s the formula I insert to have Excel count directory depth:

=SUM(LEN(A2)-LEN(SUBSTITUTE(A2,"/","")))/LEN("/")+SUM(LEN(A2)-LEN(SUBSTITUTE(A2,"=","")))/LEN("=")-2

My data always has headers, so the first URL is in row 2. The -2 at the end discounts the two slashes in http:// or https://.
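If you would rather check the numbers outside Excel, here is a minimal Python sketch of the same counting idea. It is not part of the spreadsheet method itself; the function name `url_depth` and the example.com domain are placeholders I made up, and it assumes every URL starts with a scheme such as https://.

```python
def url_depth(url: str) -> int:
    """Count "/" and "=" in a URL, then subtract 2, like the Excel formula."""
    # Same trick as LEN(A2)-LEN(SUBSTITUTE(A2,"/","")): length before minus
    # length after removing the character equals the number of occurrences.
    slashes = len(url) - len(url.replace("/", ""))
    equals = len(url) - len(url.replace("=", ""))
    # Subtract the two slashes that belong to "http://" or "https://".
    return slashes + equals - 2


# Hypothetical URL on a placeholder domain.
print(url_depth("https://example.com/default.aspx"))  # prints 1
```

A URL without a scheme would come out two levels too shallow, exactly as it would in the spreadsheet.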

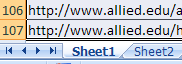

To repeat the formula for the other URLs, figure out how many rows of URLs there are. An easy way in Excel to reach the last row in a column is to put your cursor in the first cell, then press CTRL + SHIFT + Down Arrow.

Select the range B2:B107 (that is my case; you might have more or fewer URLs). This of course presumes your URL depth formula is in column B.

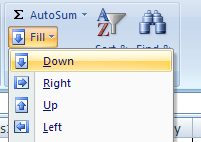

From the Home tab, click Fill and choose Fill Down.

Done

We can now see that /default.aspx is one step away from the domain and that /academics/degree/concentration/default.aspx is 4 steps away.

Just as easily we can see that /about-aau/contact-us.aspx?para1=hop&para2=dop is, due to its URL parameters, also 4 deep.
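To see where those 4 levels come from, the depth can be split into directories and parameters. The sketch below is a different technique from the formula: instead of counting characters it parses the URL with Python’s standard `urllib.parse` module. The function name `depth_breakdown` and the example.com domain are assumptions for illustration only.

```python
from urllib.parse import parse_qsl, urlsplit


def depth_breakdown(url: str) -> tuple[int, int]:
    """Return (path segments, query parameters) for a URL."""
    parts = urlsplit(url)
    # Non-empty pieces of the path, e.g. "about-aau" and "contact-us.aspx".
    directories = len([segment for segment in parts.path.split("/") if segment])
    # One entry per key=value pair in the query string, blank values included.
    parameters = len(parse_qsl(parts.query, keep_blank_values=True))
    return directories, parameters


# Hypothetical URL on a placeholder domain.
url = "https://example.com/about-aau/contact-us.aspx?para1=hop&para2=dop"
print(depth_breakdown(url))  # prints (2, 2): two path levels plus two parameters
```

The two approaches do not always agree. A URL that ends in a slash, such as https://example.com/about-aau/, has one path segment here, while counting slashes and subtracting 2 gives 2.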

From here on we could do things like:

- sort the URLs by URL depth

- highlight all URLs that are at or beyond an arbitrary level
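Both follow-ups are a sort and a conditional format away in Excel. Purely as an illustration, here is how they might look in Python. The threshold `MAX_DEPTH`, the helper `url_depth`, and the example.com URLs are all made up for this sketch.

```python
def url_depth(url: str) -> int:
    """Count "/" and "=" and subtract the two slashes of the scheme."""
    return url.count("/") + url.count("=") - 2


# Hypothetical URLs on a placeholder domain.
urls = [
    "https://example.com/default.aspx",
    "https://example.com/academics/degree/concentration/default.aspx",
    "https://example.com/about-aau/contact-us.aspx?para1=hop&para2=dop",
]

MAX_DEPTH = 3  # arbitrary level chosen for this example

# Pair every URL with its depth and sort the deepest ones to the top.
rows = sorted(((url_depth(u), u) for u in urls), key=lambda row: row[0], reverse=True)

# "Highlight" every URL at or beyond the threshold.
too_deep = [u for depth, u in rows if depth >= MAX_DEPTH]

for depth, u in rows:
    marker = "*" if depth >= MAX_DEPTH else " "
    print(marker, depth, u)
```

The starred lines are the ones you would colour in the spreadsheet.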

5 Comments

Great! Thanks a lot for this trick, I’ve never thought that url depth was so important. I’ll use this formula to check the depth of the backlinks I get.

Good post, and a fantastic trick for finding the depth of a URL so easily. Bloggers can use it to check the depth of their backlinks.

I think it’s not directory depth that Google follows; it is all about how the pages are linked. Think of it in terms of Google PR: the path depth is not important to Google. Usually sites get the most links to the homepage, and from the homepage the PR is spread through the site. In some cases a lower page has a higher PR than the homepage. It is how many clicks a page is away from the home page that determines the lessening of the PR. Furthermore, almost every single page on allied.edu is a couple of links away from the home page. So I don’t think page depth should be a problem in this case.

I agree with Well: the number of directories in a URL doesn’t automatically equate to click depth (although I’ll be stealing your nifty Excel function for other purposes!). I use Xenu Link Checker to determine click depth, as it’s conveniently returned in its crawl report.

You’re right, Well and Aidan: the number of directories or URL parameters doesn’t necessarily equate to click depth. It is a reasonable approximate measure though.

Re. Allied.edu: I’m just using that site as an example in my Excel posts, as neither we nor they stand to gain anything from the exposure 🙂

Aidan: nice use of Xenu!