Yes... I'm going there. That old chestnut - Google's duplicate content penalty. Some have argued that the penalty simply doesn't exist. And while I tend to agree that a penalty probably doesn't exist for individual pieces of duplicated content, I have never doubted that duplicated domains were a one-way ticket to a Google butt kick. Because duplicated domains make a mockery of the quality of Google's search results. And everyone knows Google doesn't like looking stupid. Which means they probably won't like this article, because it showcases a pretty serious case of undetected duplicate domains.

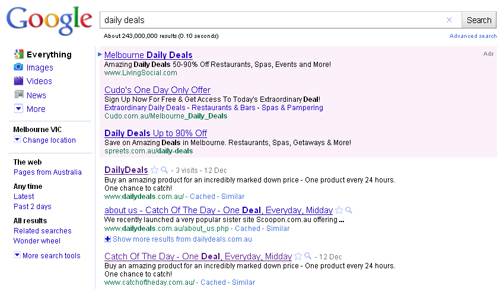

I came across the scenario recently while researching the daily deal market in Australia. If there were any markets I'd expect Google to be on top of right now, it's the daily deal market. You know, 'cause of the whole Groupon acquisition bid and all... While the Aussie market might not be the highest of their priorities, it's still an industry churning mega bucks down here. Which makes the SERP manipulation all the more fascinating. Here's a look at what the SERP looks like for the search term 'daily deals' in Google Australia:

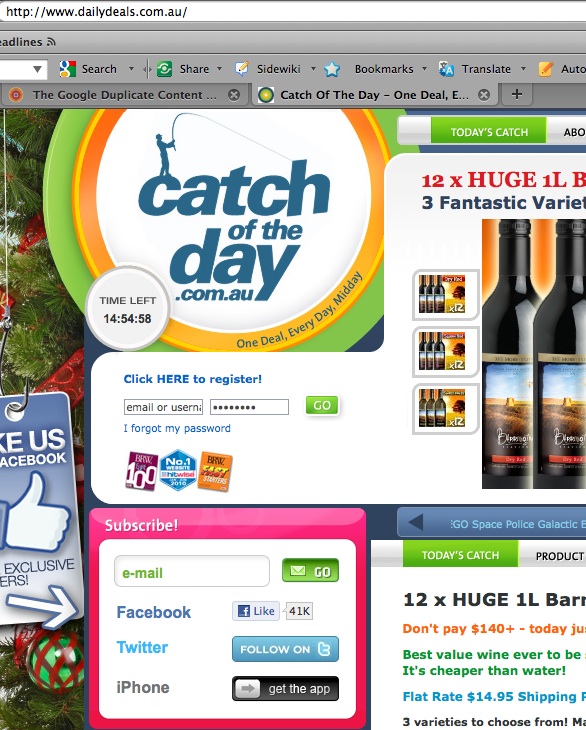

To the naked eye it doesn't appear too sinister at first. Until you start clicking on the links. Notice that the first two results within the organic results are for a site called Daily Deals. No great surprise there given Google's penchant for favouring sites with an exact match within the domain. However, things started to look fishy as I clicked through to the Daily Deals web site. Here's what it looks like:

Notice the 'Catch of the Day' branding. That's where the problem starts. Catch of the Day happens to be one of the largest daily deals web sites in Australia. And the Daily Deals web site is a perfect replica of Catch of the Day. Where it becomes really interesting is if you return to the original SERP. Notice Catch of the Day listed in third place for the 'daily deals' search term. Which means Catch of the Day has monopolised the top three positions for the 'daily deals' keyword, which as you would understand, is a damn lucrative one. Google's own keyword tool estimates that it receives almost 50,000 Australian searches a month, along with over 33,000 for 'daily deal', which it also ranks first for.

Now... this is certainly the most high profile duplicate domain example I'd ever come across. But I thought I'd return to Google's own guidelines to ensure I hadn't misunderstood the duplicate content issue. Here's what they say on the matter:

"Duplicate content on a site is not grounds for action on that site unless it appears that the intent of the duplicate content is to be deceptive and manipulate search engine results. If your site suffers from duplicate content issues, and you don't follow the advice listed above, we do a good job of choosing a version of the content to show in our search results."

While it's perfectly feasible that Catch of the Day are unaware of Google's guidelines on the matter, I'd be surprised if they haven't done this to manipulate search results. Malicious or not, the breach exists and Google hasn't detected it, which is significant when you consider the strength of signals Google has relating to the Catch of the Day web site:

- Almost 7,000 pages indexed by Google

- Over 3,500 inbound links to the domain

- A domain age dating back to 2006

- An Alexa traffic rank just outside the top 10,000 globally

- Almost 41,000 fans on Facebook

Catch of the Day should most definitely be on their radar. Now consider the clues to Google that Daily Deals is a perfect replica:

- The sites maintain an identical meta description

- The sites feature exactly the same product, with identical content each day

- And here's the clincher... if you click on any navigation item within the Daily Deals site, you'll be redirected to the Catch of the Day domain
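
For anyone who wants to check a pair of sites for the same clues, here's a rough sketch in Python. It is only an illustration, not how Google does it. The names `PageSignals`, `extract_signals` and `duplicate_clues` are made up for this example, and it assumes you already have the HTML of both home pages saved as strings. It covers two of the three clues (the matching meta description and navigation links that point at the other domain) and treats "redirected" as plain absolute links, since a genuine HTTP redirect would need a live network check.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class PageSignals(HTMLParser):
    """Collects the meta description and the hosts that absolute links point to."""

    def __init__(self):
        super().__init__()
        self.meta_description = None
        self.link_hosts = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.meta_description = (attrs.get("content") or "").strip()
        elif tag == "a" and attrs.get("href"):
            host = urlparse(attrs["href"]).netloc.lower()
            if host:  # relative links have no host, so skip them
                self.link_hosts.append(host)


def extract_signals(html):
    """Parse an HTML string and return the collected signals."""
    parser = PageSignals()
    parser.feed(html)
    return parser


def duplicate_clues(html_a, html_b, host_b):
    """List the clues suggesting page A is a replica of the site at host_b.

    html_a and html_b are HTML strings; host_b is the (hypothetical)
    hostname of the site suspected of being the original.
    """
    a, b = extract_signals(html_a), extract_signals(html_b)
    clues = []
    if a.meta_description and a.meta_description == b.meta_description:
        clues.append("identical meta description")
    if a.link_hosts and all(h == host_b for h in a.link_hosts):
        clues.append("all absolute links point to the other domain")
    return clues
```

Feed it the two home pages plus the hostname of the suspected original and it returns whichever clues it finds. Comparing the product content itself (the second clue above) would need a text similarity check on top of this.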

Doesn't take a genius to figure out what's going on, does it? And the clues are clearly there. Yet Google has missed the boat. Which has me seriously reconsidering Google's capability to detect duplicate content effectively. What do you think... is this just an anomaly or is Google's ability to effectively filter duplicate content seriously overstated?

5 Comments

I agree – Google’s handling of cross-domain duplication seems shockingly inconsistent, and bigger brands seem to get away with more. I wonder if, now that Google allows one domain to have more than one result/page (without indentation), they’re treating this as one domain but allowing it anyway. They seem to be changing gears fast in that department.

Also, just an aside, but I’ve seen duplicate content within a site have huge implications for indexation and ranking. I think where we get caught up is the semantics of the word “penalty”. Onsite duplication isn’t going to cause a Capital-P penalty in Google’s use of the word, but the difference between a “penalty” and a “filter” feels pretty moot if you suddenly stop ranking.

Interesting thought Pete. My suspicion is that if they were treating it as the one domain, the Catch of the Day site would have to appear first, as its overall ranking signals are far stronger (aside from the domain name).

@James – That’s a good point. If there were some sort of amalgamation at work, the strongest domain would probably become the equivalent of the “home” page. It’ll be interesting to see how this shakes out. Honestly, I hope Google slows down a bit. I worry too many of their changes in 2010 were reactions to Bing/Yahoo and not enough were really thought through.

I’m not seeing the same results now: only one listing for dailydeals.com.au and nothing for catchoftheday.com.au on page 1. However, I do see allthedeals.com.au has 2 pages listed.

I’ve lost count of the times I’ve had to re-educate on the ‘duplicate content penalty’ and explain there is no penalty, but there is a risk of de-duplication for certain query terms.